Quite some time back I found myself fighting with an Execution Plan generated by SQL Server for one of my stored procedures. The execution plan always returned an estimated row of “1” when processing for the current day. I won’t go into details on why this one specific stored procedure didn’t use the older cached plans as expected. I will however tell you like most things with SQL Server there is more than one way to solve a problem ![]() .

.

This method is something I have personally wanted to blog because it’s something I have only used a handful of times when I just couldn’t get the execution plan to work the way I wanted it to. Note that using this hint we are forcing the SQL Server Optimizer to use the statistics for the specific variable value that we provide. However if the table was to grow significantly in the future we may be hurting performance by forcing a bad execution plan and that is a drawback to using this hint, so now you know!

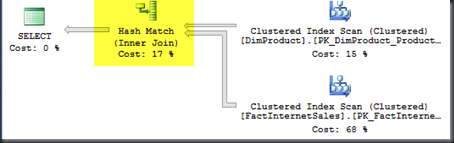

Take a look at the two screenshots below. The first is the estimated rows from the Fact Internet Sales table and the second is the estimated execution plan.

What I actually want to see for this execution plan is HASH MATCH. This will perform significantly better for the number of records that I will have. Unfortunately due to out of date statistics I’m getting a bad plan.

So let’s note two things.

- First, in most situations the best solution here is to simply update statistics. This should be part of ANY database maintenance plan.

Let’s take a look at the original query:

DECLARE @ShipDate DATE = '1/1/2008' SELECT [EnglishProductName] AS Product ,[SalesOrderNumber] ,[OrderDate] ,[DueDate] ,[ShipDate] FROM [dbo].[FactInternetSales_Backup] FIS JOIN [dbo].[DimProduct] DP ON DP.ProductKey = FIS.ProductKey WHERE ShipDate > @ShipDate

Now we are going to modify this query quickly to use the Optimize For hint. This hint is going to allow us to optimize our Execution Plan in SQL Server using the specified parameter. In my instance this is going to be a previous date where I know the statistics are reflective of what I want to see in my execution plan.

Here is the modified query:

DECLARE @ShipDate DATE = '1/1/2008' SELECT [EnglishProductName] AS Product ,[SalesOrderNumber] ,[OrderDate] ,[DueDate] ,[ShipDate] FROM [dbo].[FactInternetSales_Backup] FIS JOIN [dbo].[DimProduct] DP ON DP.ProductKey = FIS.ProductKey WHERE ShipDate > @ShipDate OPTION (OPTIMIZE FOR (@ShipDate = '1/1/2005')) GO

In this query the result set returned will still be for the original value of the variable “1/1/2008’. However the SQL Server optimizer is going to generate the plan using the OPTIMIZE FOR hint that we provided. (Highlighted in Yellow).

Now let’s take a look at our new Estimated Execution plan:

This time we are getting a Hash Match which is much more applicable for our table and the number of records that will be queried.

As always, Thanks ![]()